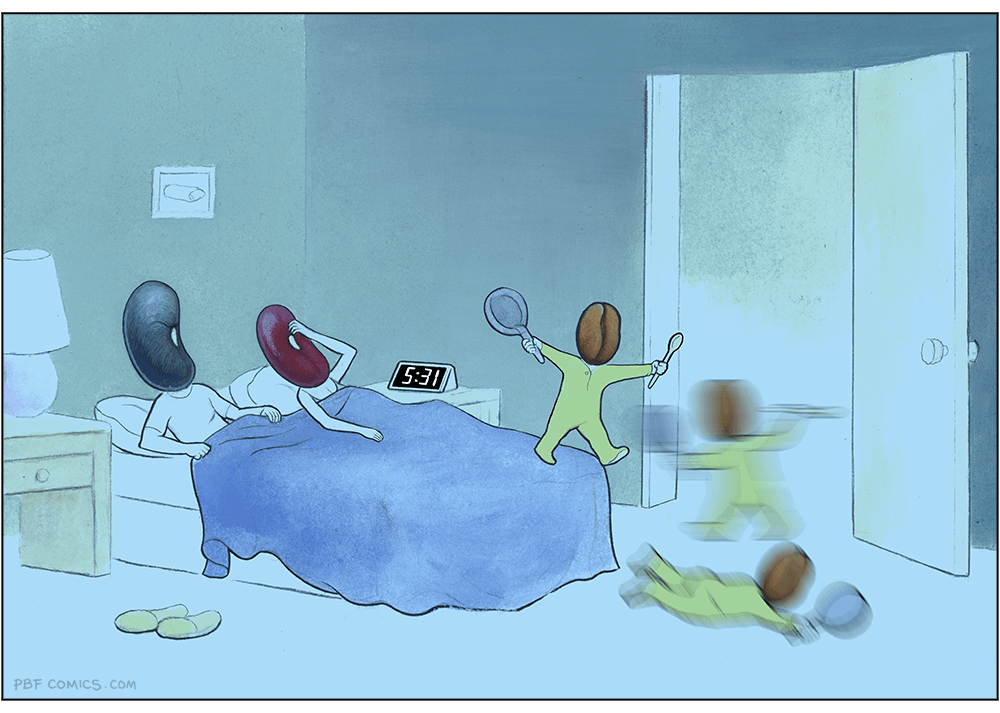

We coined a new term on the Oxide and Friends podcast last month (primary credit to Adam Leventhal) covering the sense of psychological ennui leading into existential dread that many software developers are feeling thanks to the encroachment of generative AI into their field of work.

We're calling it Deep Blue.

You can listen to it being coined in real time from 47:15 in the episode. I've included a transcript below.

Deep Blue is a very real issue.

Becoming a professional software engineer is hard. Getting good enough for people to pay you money to write software takes years of dedicated work. The rewards are significant: this is a well-compensated career that opens up a lot of great opportunities.

It's also a career that's mostly free from gatekeepers and expensive prerequisites. You don't need an expensive degree or accreditation. A laptop, an internet connection and a lot of time and curiosity are enough to get you started.

And it rewards the nerds! Spending your teenage years tinkering with computers turned out to be a very smart investment in your future.

The idea that this could all be stripped away by a chatbot is deeply upsetting.

I've seen signs of Deep Blue in most of the online communities I spend time in. I've even faced accusations from my peers that I am actively harming their future careers through my work helping people understand how well AI-assisted programming can work.

I think this is an issue which is causing genuine mental anguish for a lot of people in our community. Giving it a name makes it easier for us to have conversations about it.

My experiences of Deep Blue

I distinctly remember my first experience of Deep Blue. For me it was triggered by ChatGPT Code Interpreter back in early 2023.

My primary project is Datasette, an ecosystem of open source tools for telling stories with data. I had dedicated myself to the challenge of helping people (initially focusing on journalists) clean up, analyze and find meaning in data, in all sorts of shapes and sizes.

I expected I would need to build a lot of software for this! It felt like a challenge that could keep me happily engaged for many years to come.

Then I tried uploading a CSV file of San Francisco Police Department Incident Reports - hundreds of thousands of rows - to ChatGPT Code Interpreter and... it did every piece of data cleanup and analysis I had on my napkin roadmap for the next few years with a couple of prompts.

It even converted the data into a neatly normalized SQLite database and let me download the result!

I remember having two competing thoughts in parallel.

On the one hand, as somebody who wants journalists to be able to do more with data, this felt like a huge breakthrough. Imagine giving every journalist in the world an on-demand analyst who could help them tackle any data question they could think of!

But on the other hand... what was I even for? My confidence in the value of my own projects took a painful hit. Was the path I'd chosen for myself suddenly a dead end?

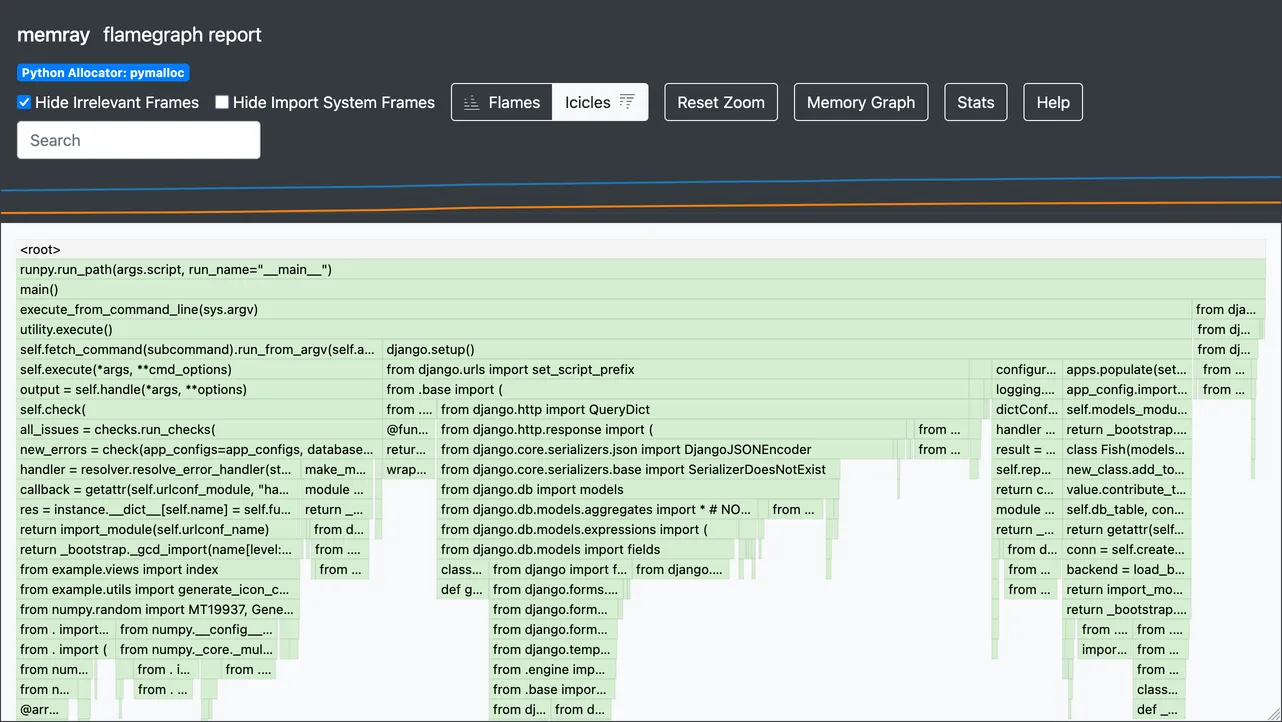

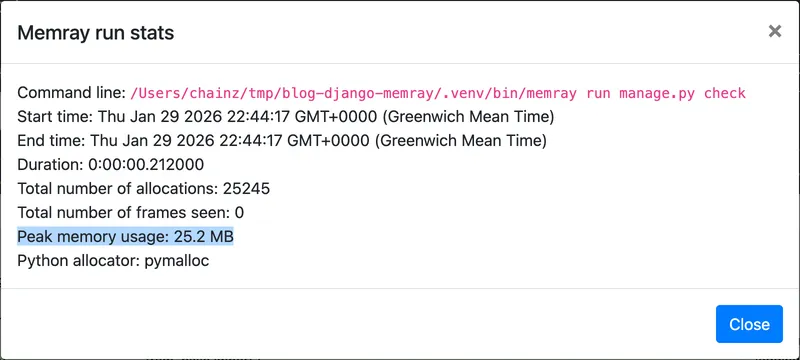

I've had some further pangs of Deep Blue just in the past few weeks, thanks to the Claude Opus 4.5/4.6 and GPT-5.2/5.3 coding agent effect. As many other people are also observing, the latest generation of coding agents, given the right prompts, really can churn away for a few minutes to several hours and produce working, documented and fully tested software that exactly matches the criteria they were given.

"The code they write isn't any good" doesn't really cut it any more.

A lightly edited transcript

Bryan: I think that we're going to see a real problem with AI induced ennui where software engineers in particular get listless because the AI can do anything. Simon, what do you think about that?

Simon: Definitely. Anyone who's paying close attention to coding agents is feeling some of that already. There's an extent where you sort of get over it when you realize that you're still useful, even though your ability to memorize the syntax of programming languages is completely irrelevant now.

Something I see a lot of is people out there who are having existential crises and are very, very unhappy because they're like, "I dedicated my career to learning this thing and now it just does it. What am I even for?" I will very happily try and convince those people that they are for a whole bunch of things and that none of that experience they've accumulated has gone to waste, but psychologically it's a difficult time for software engineers.

[...]

Bryan: Okay, so I'm going to predict that we name that. Whatever that is, we have a name for that kind of feeling and that kind of, whether you want to call it a blueness or a loss of purpose, and that we're kind of trying to address it collectively in a directed way.

Adam: Okay, this is your big moment. Pick the name. If you call your shot from here, this is you pointing to the stands. You know, I – Like deep blue, you know.

Bryan: Yeah, deep blue. I like that. I like deep blue. Deep blue. Oh, did you walk me into that, you bastard? You just blew out the candles on my birthday cake.

It wasn't my big moment at all. That was your big moment. No, that is, Adam, that is very good. That is deep blue.

Simon: All of the chess players and the Go players went through this a decade ago and they have come out stronger.

Turns out it was more than a decade ago: Deep Blue defeated Garry Kasparov in 1997.
