Last summer, I wrote about the Homework Apocalypse, the coming reality where AI could complete most traditional homework assignments, rendering them ineffective as learning tools and assessment measures. My prophecy has come true, and AI can now ace most tests. Yet remarkably little has changed as a result, even as AI use became nearly universal among students.

As of eight months ago, a representative survey in the US found that 82% of undergraduates and 72% of K12 students had used AI for school. That is extraordinarily rapid adoption. Of the students using AI, 56% used it for help with writing assignments, and 45% for completing other types of schoolwork. The survey found many positive uses of AI as well, which we will return to, but, for now, let’s focus on the question of AI assistance on homework. Students don’t always see getting AI help as cheating (they are simply getting answers to some tricky problem or a challenging part of an essay), but many teachers do.

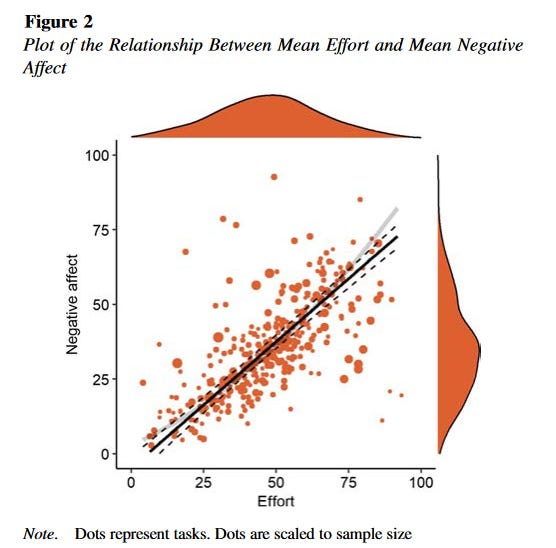

To be clear, AI is not the root cause of cheating. Cheating happens because schoolwork is hard and high stakes. And schoolwork is hard and high stakes because learning is not always fun and forms of extrinsic motivation, like grades, are often required to get people to learn. People are exquisitely good at figuring out ways to avoid things they don’t like to do, and, as a major new analysis shows, most people don’t like mental effort. So, they delegate some of that effort to the AI. In general, I am in favor of delegating tasks to AI (the subject of my new class on MasterClass), but education is different - the effort is the point.

This is not a new problem. One of the first uses of any new technology has always been to get help with homework. A study of thousands of students at Rutgers found that when they did their homework in 2008, it improved test grades for 86% of them (see, homework really does help!), but homework only helped 45% of students in 2017. Why? The rise of the Internet. By 2017, a majority of students were copying internet answers, rather than doing the work themselves.

The Homework Apocalypse has already happened and may even have happened before generative AI! Why are more people not seeing this as an emergency? I think it has to do with two illusions.

The Illusions

The first illusion is the Detection Illusion: teachers believe they can still easily detect AI use, and therefore can prevent it from being used in schoolwork. This Detection Illusion leads educators to rely on outdated assessment methods, believing they can easily spot AI-generated work when in reality, the technology has far surpassed our ability to consistently identify it:

No specialized AI detectors can detect AI writing with high accuracy and without the risk of false positives, especially after multiple rounds of prompting. Even watermarks won’t help much.

People can’t detect AI writing well. Editors at top linguistics journals couldn’t. Teachers couldn’t (though they thought they could - the Illusion again). While simple AI writing might be detectable (“delve,” anyone?), there are plenty of ways to disguise “AI writing” styles through simple prompting. In fact, well-prompted AI writing is judged more human than human writing by readers.

You can’t ask an AI to detect AI writing (even though people keep trying). When asked if something written by a human was written by an AI, GPT-4 gets it wrong 95% of the time.

There are still options that preserve old assignments. Teachers can return to in-class writing, asking students to demonstrate their skills in person, or other techniques that might mitigate AI cheating through close monitoring. But, for the vast majority of teachers, doing so requires adjustment and changes that have yet to be made. To date, few have actually reacted to the shattering of the illusion of AI detection by shifting how they approach teaching and assessment.

While teachers grapple with the Detection Illusion, students face their own misconception: Illusory Knowledge. They don’t actually realize that getting help with homework is undermining their learning. After all, they are getting advice and answers from the AI that help them solve problems, which feels like fluency. As the authors of the study at Rutgers wrote: “There is no reason to believe that the students are aware that their homework strategy lowers their exam score... they make the commonsense inference that any study strategy that raises their homework quiz score raises their exam score as well.”

The same thing appears to be happening with AI, as a study by some of my colleagues at Penn discovered. They conducted an experiment at a high school in Turkey where some students were given access to GPT-4 to help with homework, either through the standard ChatGPT interface (no prompt engineering) or using ChatGPT with a tutor prompt. Student homework scores shot up, but the use of unprompted standard ChatGPT to help with homework undermined learning by acting like a crutch. Even though students thought they learned a lot from using ChatGPT, they actually learned less - scoring 17% worse on their final exam.

Despite this, the survey I quoted earlier found that 59% of teachers see AI as positive for learning, and I don’t think they are wrong. While just using AI as a crutch can hurt learning, more careful use of AI is different. We can see signs of this in the Turkey study, which found that giving students a GPT with a basic tutor prompt for ChatGPT, instead of having them use ChatGPT on their own, boosted homework scores without lowering final exam grades. Plus, a study done in a massive programming class at Stanford found that use of ChatGPT led to increased, not decreased, exam grades.

And, of course, students are not using AI just to do their homework. They are getting aid in understanding complex topics, brainstorming ideas, refreshing their knowledge, creating new forms of creative work, getting feedback, getting advice, and so much more. Focusing just on the question of homework, and the illusions it fosters, can discourage us from making progress.

Encouraging, not replacing, thinking

To do so we need to center teachers in the process of using AI, rather than just leaving AI to students (or to those who dream of replacing teachers entirely). We know that almost three-quarters of teachers are already using AI for work, but we have just started to learn the most effective ways for teachers to use AI. A recent deep qualitative study of teachers found that teachers who used AI for both output (create a worksheet, develop a quiz) and to help with input (help me think through what makes a Great American novel, give me ways to explain positive and negative numbers) get more value than if they use AI for producing output alone. This points to a useful path forward in AI for education, using it as a co-intelligence and tool for helping humans do better thinking.

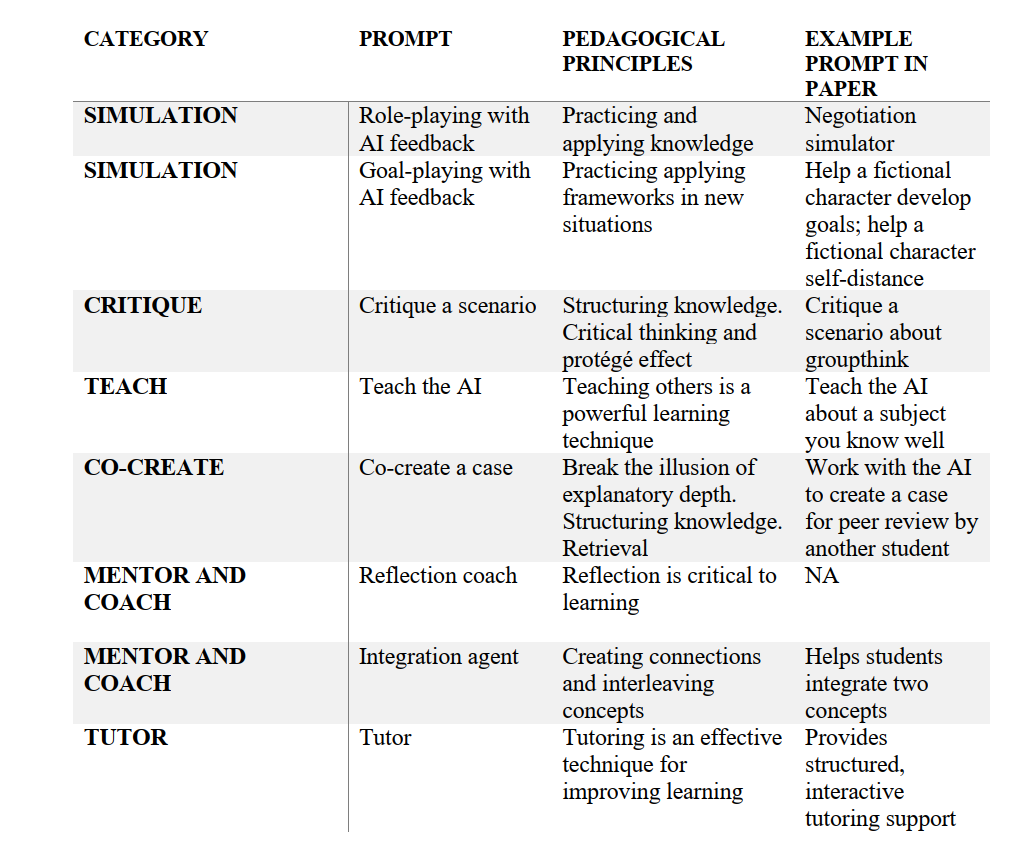

Increasingly, AI is being used in the same way for students, pushing them to think, rather than use AI as a crutch. For example, we have released multiple prompts, all under a free Creative Commons license, that instructors can customize or modify for their classrooms (here is a deep dive into one of them - a simulator prompt). These sorts of prompts are designed to expose Illusory Knowledge, forcing students to confront what they know and don’t know. Many other educators are designing similar exercises. In doing so, we can take advantage of what makes AI so promising for teaching - its ability to produce customized learning experiences that meet students where they are, and which are broadly accessible in ways that past forms of educational technology never were.

The integration of AI in education is not a future possibility—it's our present reality. This shift demands more than passive acceptance or futile resistance. It requires a fundamental reimagining of how we teach, learn, and assess knowledge. As AI becomes an integral part of the educational landscape, our focus must evolve. The goal isn't to outsmart AI or to pretend it doesn't exist, but to harness its potential to enhance education while mitigating the downside. The question now is not whether AI will change education, but how we will shape that change to create a more effective, equitable, and engaging learning environment for all.

It is with great sadness that I find myself penning the hardest news post I’ve ever needed to write here at AnandTech. After over 27 years of covering the wide – and wild – world of computing hardware, today is AnandTech’s final day of publication.

For better or worse, we’ve reached the end of a long journey – one that started with a review of an AMD processor, and has ended with the review of an AMD processor. It’s fittingly poetic, but it is also a testament to the fact that we’ve spent the last 27 years doing what we love, covering the chips that are the lifeblood of the computing industry.

A lot of things have changed in the last quarter-century – in 1997 NVIDIA had yet to even coin the term “GPU” – and we’ve been fortunate to watch the world of hardware continue to evolve over the time period. We’ve gone from boxy desktop computers and laptops that today we’d charitably classify as portable desktops, to pocket computers where even the cheapest budget device puts the fastest PC of 1997 to shame.

The years have also brought some monumental changes to the world of publishing. AnandTech was hardly the first hardware enthusiast website, nor will we be the last. But we were fortunate to thrive in the past couple of decades, when so many of our peers did not, thanks to a combination of hard work, strategic investments in people and products, even more hard work, and the support of our many friends, colleagues, and readers.

Still, few things last forever, and the market for written tech journalism is not what it once was – nor will it ever be again. So, the time has come for AnandTech to wrap up its work, and let the next generation of tech journalists take their place within the zeitgeist.

It has been my immense privilege to write for AnandTech for the past 19 years – and to manage it as its editor-in-chief for the past decade. And while I carry more than a bit of remorse in being AnandTech’s final boss, I can at least take pride in everything we’ve accomplished over the years, whether it’s lauding some legendary products, writing technology primers that still remain relevant today, or watching new stars rise in expected places. There is still more that I had wanted AnandTech to do, but after 21,500 articles, this was a good start.

And while the AnandTech staff is riding off into the sunset, I am happy to report that the site itself won’t be going anywhere for a while. Our publisher, Future PLC, will be keeping the AnandTech website and its many articles live indefinitely, so that all of the content we’ve created over the years remains accessible and citable. Even without new articles to add to the collection, I expect that many of the things we’ve written over the past couple of decades will remain relevant for years to come – and remain accessible just as long.

The AnandTech Forums will also continue to be operated by Future’s community team and our dedicated troop of moderators. With forum threads going back to 1999 (and some active members just as long), the forums have a history almost as long and as storied as AnandTech itself (wounded monitor children, anyone?). So even when AnandTech is no longer publishing articles, we’ll still have a place for everyone to talk about the latest in technology – and have those discussions last longer than 48 hours.

Finally, for everyone who still needs their technical writing fix, our formidable opposition of the last 27 years and fellow Future brand, Tom’s Hardware, is continuing to cover the world of technology. There are a couple of familiar AnandTech faces already over there providing their accumulated expertise, and the site will continue doing its best to provide a written take on technology news.

So Many Thank Yous

As I look back on everything AnandTech has accomplished over the past 27 years, there are more than a few people, groups, and companies that I would like to thank on behalf of both myself and AnandTech as a whole.

First and foremost, I cannot thank enough all the editors who have worked for AnandTech over the years. There are far more of you than I can ever name, but AnandTech’s editors have been the lifeblood of the site, bringing over their expertise and passion to craft the kind of deep, investigative articles that AnandTech is best known for. These are the finest people I’ve ever had the opportunity to work with, and it shouldn’t come as any surprise that these people have become even bigger successes in their respective fields. Whether it’s hardware and software development, consulting and business analysis, or even launching rockets into space, they’ve all been rock stars whom I’ve been fortunate to work with over the past couple of decades.

Ian Cutress, Anton Shilov, and Gavin Bonshor at Computex 2019

And a special shout out to the final class of AnandTech editors, who have been with us until the end, providing the final articles that grace this site. Gavin Bonshor, Ganesh TS, E. Fylladitakis, and Anton Shilov have all gone above and beyond to meet impossible deadlines and go half-way around the world to report on the latest in technology.

Of course, none of this would have been possible without the man himself, Anand Lal Shimpi, who started this site out of his bedroom 27 years ago. While Anand retired from the world of tech journalism a decade ago, the standard he set for quality and the lessons he taught all of us have continued to resonate within AnandTech to this very day. And while it would be tautological to say that there would be no AnandTech without Anand, it’s none the less true – the mark on the tech publishing industry that we’ve been able to make all started with him.

MWC 2014: Ian Cutress, Anand Lal Shimpi, Joshua Ho

I also want to thank the many, many hardware and software companies we’ve worked with over the years. More than just providing us review samples and technical support, we’ve been given unique access to some of the greatest engineers in the industry. People who have built some of the most complex chips ever made, and casually forgotten more about the subject than we as tech journalists will ever know. So being able to ask those minds stupid questions, and seeing the gears turn in their heads as they explain their ideas, innovations, and thought processes has been nothing short of an incredible learning experience. We haven’t always (or even often) seen eye-to-eye on matters with all of the companies we've covered, but as the last 27 years have shown, sharing the amazing advancements behind the latest technologies has benefited everyone, consumers and companies alike.

Thank yous are also due to AnandTech’s publishers over the years – Future PLC, and Purch before them. AnandTech’s publishers have given us an incredible degree of latitude to do things the AnandTech way, even when it meant taking big risks or not following the latest trend. A more cynical and controlling publisher could have undoubtedly found ways to make more money from the AnandTech website, but the resulting content would not have been AnandTech. We’ve enjoyed complete editorial freedom up to our final day, and that’s not something so many other websites have had the luxury to experience. And for that I am thankful.

CES 2016: Ian Cutress, Ganesh TS, Joshua Ho, Brett Howse, Brandon Chester, Billy Tallis

Finally, I cannot thank our many readers enough. Whether you’ve been following AnandTech since 1997 or you’ve just recently discovered us, everything we’ve published here we’ve done for you. To show you what amazing things were going on in the world of technology, the radical innovations driving the next generation of products, or a sober review that reminds us all that there’s (almost) no such thing as bad products, just bad pricing. Our readers have kept us on our toes, pushing us to do better, and holding us responsible when we’ve strayed from our responsibilities.

Ultimately, a website is only as influential as its readers, otherwise we would be screaming into the void that is the Internet. For all the credit we can claim as writers, all of that pales in comparison to our readers who have enjoyed our content, referenced it, and shared it with the world. So from the bottom of my heart, thank you for sticking with us for the past 27 years.

Continuing the Fight Against the Cable TV-ification of the Web

Finally, I’d like to end this piece with a comment on the Cable TV-ification of the web. A core belief that Anand and I have held dear for years, and is still on our About page to this day, is AnandTech’s rebuke of sensationalism, link baiting, and the path to shallow 10-o'clock-news reporting. It has been our mission over the past 27 years to inform and educate our readers by providing high-quality content – and while we’re no longer going to be able to fulfill that role, the need for quality, in-depth reporting has not changed. If anything, the need has increased as social media and changing advertising landscapes have made shallow, sensationalistic reporting all the more lucrative.

Speaking of TV: Anand Hosting The AGN Hardware Show (June 1998)

For all the tech journalists out there right now – or tech journalists to be – I implore you to remain true to yourself, and to your readers' needs. In-depth reporting isn’t always as sexy or as exciting as other avenues, but now, more than ever, it’s necessary to counter sensationalism and cynicism with high-quality reporting and testing that is used to support thoughtful conclusions. To quote Anand: “I don't believe the web needs to be academic reporting or sensationalist garbage - as long as there's a balance, I'm happy.”

Signing Off One Last Time

Wrapping things up, it has been my privilege over the last 19 years to write for one of the most impactful tech news websites that has ever existed. And while I’m heartbroken that we’re at the end of AnandTech’s 27-year journey, I can take solace in everything we’ve been able to accomplish over the years. All of which has been made possible thanks to our industry partners and our awesome readers.

On a personal note, this has been my dream job; to say I’ve been fortunate would be an understatement. And while I’ll no longer be the editor-in-chief of AnandTech, I’m far from being done with technology as a whole. I’ll still be around on Twitter/X, and we’ll see where my own journey takes me next.

To everyone who has followed AnandTech over the years, fans, foes, readers, competitors, academics, engineers, and just the technologically curious who want to learn a bit more about their favorite hardware, thank you for all of your patronage over the years. We could not have accomplished this without your support.

-Thanks,

Ryan Smith

Ars Technica has a good article on what’s happening in the world of television surveillance. More than even I realized.

by Vasilis van Gemert published on

Recently when I gave a coding assignment — an art directed web page about a font — a student asked: does it have to be semantic and shit? The whole class looked up, curious about the answer — please let it be no! I answered that no, it doesn’t have to be semantic and shit, but it does have to be well designed and the user experience should be well considered. Relieved, all of my students agreed. They do care about a good user experience.

The joke here is, of course, that a well considered user experience starts with well considered HTML.

Somehow my students are allergic to semantics and shit. And they’re not alone. If you look at 99% of all websites in the wild, everybody who worked on them seems to be allergic to semantics and shit. On most websites heading levels are just random numbers, loosely based on font-size. Form fields have no labels. Links and buttons are divs. It’s really pretty bad. So it’s not just my students, the whole industry doesn’t understand semantics and shit.

Recently I decided to stop using the word semantics. Instead I talk about the UX of HTML. And all of a sudden my students are not allergic to HTML anymore but really interested. Instead of explaining the meaning of a certain element, I show them what it does. So we look at what happens when you add a label to an input: The input and the label now form a pair. You can now click on the label to interact with a checkbox. The label will be read out loud when you focus on an input with a screenreader. When you hover over a label, the hover state of the connected input is shown. My students love stuff like that. They care about UX.
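The label and input pairing described above can also be checked mechanically. What follows is a minimal sketch, not a full accessibility audit: it uses Python's standard `html.parser` to list form controls that have neither a wrapping label nor a `label` whose `for` attribute points at their `id`. The class name `LabelAudit`, the function `unlabelled_controls`, and the sample markup are all made up for illustration, and the sketch deliberately ignores other naming mechanisms such as `aria-label`.

```python
from html.parser import HTMLParser


class LabelAudit(HTMLParser):
    """Collect form controls and the ids that <label for="..."> points at."""

    def __init__(self):
        super().__init__()
        self.labelled_ids = set()  # ids referenced by <label for="...">
        self.controls = []         # (id, wrapped_in_label) for each control
        self._label_depth = 0      # greater than 0 while inside a <label>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "label":
            self._label_depth += 1
            if attrs.get("for"):
                self.labelled_ids.add(attrs["for"])
        elif tag in ("input", "select", "textarea"):
            # Hidden inputs are never shown, so they need no label.
            if attrs.get("type") != "hidden":
                self.controls.append((attrs.get("id"), self._label_depth > 0))

    def handle_endtag(self, tag):
        if tag == "label" and self._label_depth:
            self._label_depth -= 1


def unlabelled_controls(html):
    """Return the ids (or None) of controls that are not paired with a label."""
    audit = LabelAudit()
    audit.feed(html)
    audit.close()
    return [cid for cid, wrapped in audit.controls
            if not wrapped and cid not in audit.labelled_ids]


# Hypothetical sample: two properly paired controls and one bare input.
SAMPLE = """
<form>
  <input type="checkbox" id="news">
  <label for="news">Send me the newsletter</label>

  <label>Email <input type="email" id="email"></label>

  <input type="text" id="nickname" placeholder="Nickname">
</form>
"""

print(unlabelled_controls(SAMPLE))  # ['nickname']
```

The checkbox and the email field each form a pair with their label, so only the placeholder-only `nickname` field is reported: that is the one where clicking the text does nothing and a screen reader has no label to read out.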

I show them that a span with an onclick-event might seem to be behaving like a link, but that there are many layers of UX missing when you look a bit closer: right-click on this span, and a generic context menu opens up. When on the other hand you right-click on a proper link like this one a specialised context menu opens, with all kinds of options that are specific to a link. And I show them that proper links show up when you ask your screen reader to list all the links on a page, yet spans with an onclick-event don’t. Moreover, a span doesn’t receive focus when you tab to it. And so on. My students see this and they get it. And they love the fact that by being lazy they get much more result.
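To find the spans that only pretend to be links, a rough sketch along the following lines could help. It assumes the click handler is written as an inline `onclick` attribute, so handlers attached from a script file will not be found. `FakeControlFinder`, `find_fake_controls`, and the sample markup are illustrative names, not part of any existing tool.

```python
from html.parser import HTMLParser

# Elements that bring focus, keyboard and context-menu behaviour for free.
NATIVE_INTERACTIVE = {"a", "button", "input", "select", "textarea", "summary"}


class FakeControlFinder(HTMLParser):
    """Find non-interactive elements that carry an inline onclick handler."""

    def __init__(self):
        super().__init__()
        self.findings = []  # (tag, line number, has_tabindex)

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if "onclick" in attrs and tag not in NATIVE_INTERACTIVE:
            line, _column = self.getpos()
            # Without tabindex the element cannot even receive keyboard focus.
            self.findings.append((tag, line, "tabindex" in attrs))


def find_fake_controls(html):
    """Return (tag, line, has_tabindex) for every clickable non-control."""
    finder = FakeControlFinder()
    finder.feed(html)
    finder.close()
    return finder.findings


# Hypothetical sample: a fake link, a real link, a fake button, a real button.
SAMPLE = """<p>
  <span onclick="location.href='/fonts'">Fonts (a span)</span>
  <a href="/fonts">Fonts (a real link)</a>
  <div onclick="save()" tabindex="0">Save</div>
  <button type="button" onclick="save()">Save</button>
</p>"""

for tag, line, has_tabindex in find_fake_controls(SAMPLE):
    print(f"line {line}: <{tag}> with onclick, tabindex present: {has_tabindex}")
```

Only the span and the div are flagged. The real link and the real button have click behaviour too, but they also bring the context menu, focus handling, and screen reader listing with them, so there is nothing to report.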

So when I teach about HTML I always start with the elements that are obviously interactive. I show them the multitude of UX layers of a link, I show them the layers and layers of UX that are added to a well considered form. I show them what happens on a phone when you use an input with a default text type instead of the proper type of email. They get this, and they want to know more. So I show them more. I show them the required attribute which makes it possible to validate not only form fields, but also fieldsets and forms. They love this stuff.

Then I go on and show the details element with a summary. Whoah! No JavaScript!

These interactive things are the most important parts of HTML. These are the things that truly break or make a site. If you don’t use these elements properly, many people are actively excluded and the UX degrades in many ways. The interactive elements are what makes the web the web.

The old focus on semantics

Every time I come home from a web conference I hang the lanyard with the badge on a doorknob. There are at least 35 lanyards there. The oldest is more than fifteen years old, the newest just a few months. On most of these conferences there was someone telling us about the importance of using proper semantic HTML. And they all talked about the importance of heading levels. So for at least 15 years we’ve been telling the web design and web development community that heading levels are very important. Yet after all these years, and after all these conferences there are almost no websites that do it right.

Later, when HTML5 was introduced the talks about semantics were still mostly about creating a proper document outline, but this time with sectioning elements in combination with headings. Unfortunately this idea was never properly implemented by browsers. And it turned out that these sectioning elements are very hard to understand. For years I’ve tried to explain the difference between a section and an article, and almost nobody gets it. And of course they don’t, because it’s very, very complicated theory. And above all: There’s no clear UX feature to point at. The user experience doesn’t change dramatically if you use a section instead of an article. It’s mostly theoretical. So nowadays, next to divitis, we also have sectionitis.

Understanding how heading levels work is hard. And understanding how sectioning elements work is really hard. And explaining these things is even harder. What’s the use for theoretical purity if the end result is the same?

Yes, I know that some sectioning elements actually have some UX attached to them. But not that much UX if you compare it to the real interactive elements. Not getting your heading levels right is not at all as destructive as using divs instead of links.

Now, I’m not saying that we should stop teaching people about heading levels and sections. We shouldn’t. Heading levels and sections do things as well. But we should think about when we teach these more complicated and subtle parts of HTML. First we need to get people excited about HTML by showing all the free yet complex layers of UX you get when you use the interactive elements properly. And then, when they do understand the interactive elements, when they’re really excited and they ask for more, show them the more obscure UX patterns. You need a good idea of what UX is before you can understand things like the option to navigate through the headings on the page with a screen reader. Without an idea of what UX means you cannot understand what landmarks do. First start with the obvious, then show the details.
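For readers who have never used heading navigation, here is a small sketch of roughly what such a list of headings looks like. It assumes plain `h1` to `h6` elements and uses Python's standard `html.parser`. The helper names `heading_outline` and `skipped_levels`, and the sample page, are invented for this example, and real screen readers do considerably more than this.

```python
from html.parser import HTMLParser

HEADING_TAGS = {"h1", "h2", "h3", "h4", "h5", "h6"}


class HeadingCollector(HTMLParser):
    """Collect (level, text) for every heading, in document order."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._level = None  # level of the heading currently being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in HEADING_TAGS:
            self._level = int(tag[1])
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in HEADING_TAGS and self._level is not None:
            # Collapse whitespace so nested inline markup reads as one line.
            text = " ".join("".join(self._text).split())
            self.headings.append((self._level, text))
            self._level = None


def heading_outline(html):
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    return collector.headings


def skipped_levels(headings):
    """Return headings that jump more than one level deeper than the previous one."""
    skipped = []
    previous = 0
    for level, text in headings:
        if level > previous + 1:
            skipped.append((level, text))
        previous = level
    return skipped


# Hypothetical page where an h4 follows an h2 directly.
SAMPLE = """
<h1>Fonts</h1>
<h2>History</h2>
<h4>Early <em>metal</em> type</h4>
<h2>Usage</h2>
"""

outline = heading_outline(SAMPLE)
for level, text in outline:
    print("  " * (level - 1) + text)
print(skipped_levels(outline))  # [(4, 'Early metal type')]
```

The indented printout is a crude stand-in for the heading list a screen reader user can jump through, and the skipped level shows up as an unexplained gap in that list.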

I am very much looking forward to 15 more years of web conferences and publications. I look forward to seeing inspiring talks about the UX of HTML. Talks about the incredible radio button and its wonderful indeterminate state! Talks about validating forms in a friendly way with just HTML and some clever CSS. Talks about the different context menus that appear on different elements. In other words, talks about what HTML does, and much less about what it means in theory. Let’s talk about user experience, and let’s stop talking about semantics and shit.

About Vasilis van Gemert

Vasilis is a lecturer at the Amsterdam University of Applied Sciences. Here he teaches the next generation of digital product designers about the web. He believes that universities should research important topics that “the industry” tends to ignore. That’s why he teaches about designing for accessibility (and about CSS, which can use more love as well).

Website: vasilis.nl


The highest signal-to-noise software engineering interview I’ve seen goes like this:

Here’s a repo you’ve never seen before. Here’s how to build and run the tests in this repo. There’s a bug: what we’re expecting is X, but we see Y instead. Find the bug and maybe even write some code to fix it.

I’ve only ever heard it called a “bug squash” interview, but if it goes by other names I’d love to learn them (if only to hear about more companies that use this interview style).

There’s a lot of reasons why I like this interview:

It reflects everyday software development. Have I ever been paid to write a function that checks if a word is a palindrome? No. Have I had to dive into foreign code (or maybe just: “code foreign to me”) to unbreak something? Literally every day.

It’s fun. It’s fun in the same way an escape room is fun. It’s fun because of the dopamine you get when the test suite blinks green. It’s fun because fixing self-contained, reproducible bugs like this is what so many of us enjoy the most about software engineering in the first place.

It’s easy for the candidate to self-assess their own progress. I’ve sometimes heard this reduced to “it’s fair.” At the very least, maybe this is a necessary condition for a fair interview, though I’m unclear whether it’s sufficient.

When the candidate isn’t doing well on it, they probably already knew it without needing to be told as much by the recruiter. This is a much better candidate experience than the whiplash of thinking you solved a question perfectly only to realize that the interviewer was looking for something else entirely.

It’s especially useful in certain kinds of high-growth companies. If the median tenure of software engineers at a company is low (2 years? 1 year? God forbid… 6 months?), there’s going to be a huge mass of code that the majority of engineers are seeing for the first time. In these companies, foreign code is almost the norm, not the exception!

It gives experienced candidates a moment in the spotlight. I’ve seen candidates drop into strace to debug a Ruby program. I’ve seen candidates fire up mitmproxy to debug a Python web server. I’ve seen candidates wield their text editor like it was an extension of their fingertips. When someone is in complete command of their tools, this interview showcases that better than any other interview.

Cheating effectively is indistinguishable from debugging skill. Even with knowledge of the exact bug ahead of time, if you just open the file with the bug, barf out the code to fix it, and run the tests, you’re going to fail the interview.

Passing involves convincing the interviewer that the technique used to find the bug is repeatable and somewhat “scientific.” Is the candidate making hypotheses about how the codebase works and testing them? Does the candidate have good tools or techniques for finding code and navigating a codebase? Is the candidate building up a mental model of the codebase as a whole, or just blindly tracing code line by line, hoping the bug eventually falls under their gaze?

Even if you only did these things because someone slipped you a prepared, hour-long script to recite, congrats: you just cheated your way into learning how to debug. That’s a useful skill!

It recognizes how much time in software development is spent reading and understanding existing code vs writing net new code. (Hint: even in the most product-heavy, greenfield teams, it’s not zero!)

As a result, it doesn’t require “practicing how to interview” the same way that leetcode questions do. Candidates who are good at this interview are good at it because it’s a skill they’ve practiced day in and day out their entire career.

It’s my favorite interview to give by far.

Here’s the problems with it:

Writing these interview questions takes time. The bug needs to fit some sort of sweet spot between “too hard to make meaningful progress on in an hour” and “so trivial it takes 5 minutes.” It’s not going to be obvious whether a bug lies in that sweet spot without calibration testing.

You’re probably going to want more than one bug per project. Some candidates will find the bug in 10-15 minutes naturally, but you’re going to want to keep using the remaining ~40 minutes digging in to expose their full ability: you want to know when you’ve found a strong passing candidate vs an average passing candidate. Finding more of these already-rare “sweet spot” bugs is more work.

You’ll need at least one question per language that you want to allow people to interview in. You’ll probably start with a few like JavaScript, Python, Java, and C++, but you probably want to extend that to C#, Objective-C, Ruby, Go, Rust, etc. If you ask candidates to choose and they’re forced to choose a language whose debugging tools they’re unfamiliar with, you’ll get less signal. “Was this performance poor because they’re bad at debugging, or just unfamiliar with this language’s tools?”

Similarly, unless the language as a whole makes it prohibitively difficult to develop on certain operating systems, you’re going to want to make sure that the project in question has multi-platform support (basically: you’ll want projects which build and pass tests on Windows absent WSL).

The codebase has to be relatively easy to build and test. Candidates are going to show up to interview with the personal laptop that they last used 10 years ago in college and never needed to replace because work always gave them a new one every 2 years. It might take you 10 seconds to build and test that project, but on their 2013 MacBook Air with Zoom running in the background, their dev loop is easily 10-20x longer.

For companies that do on-site interview loops (rare post-pandemic), it’s possible to maintain a pool of interview loaner laptops to bail out candidates like this, but this also means needing to pre-install most of the tools (IDEs, terminal emulators, text editors, etc.) that candidates are likely to want, and replicating that across macOS, Windows, and Linux.

It requires upkeep, which is rare for most interviews. The snapshot of the project at a point in time will bitrot as new language, dependency, and operating system versions are released. In practice it’s not usually too bad, because if the question bitrots, chances are the same problem is affecting the repo the snapshot was taken from.

It depends on the candidate being able to install the dependencies of the project on their laptop (including any system or language-level dependencies). In practice, this also isn’t too bad, as you can provide candidates a mostly-empty “before your interview” sandbox repo which mimics the build and test setup for the target repo so they can arrive to the interview with a working development environment.
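As a rough idea of what such a “before your interview” sandbox could ship with, here is a minimal environment check. Everything in it is an assumption made for illustration: the function name `check_environment`, the tool list, and the minimum Python version would all be replaced by whatever the target repo actually needs.

```python
import shutil
import sys


def check_environment(required_tools, min_python=(3, 8)):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if sys.version_info < min_python:
        wanted = ".".join(map(str, min_python))
        found = sys.version.split()[0]
        problems.append(f"Python {wanted}+ required, found {found}")
    for tool in required_tools:
        # shutil.which returns None when the executable is not on PATH.
        if shutil.which(tool) is None:
            problems.append(f"'{tool}' not found on PATH")
    return problems


# Hypothetical tool list; a real sandbox would mirror the target repo's build.
issues = check_environment(["git", "make"])
for issue in issues:
    print("PROBLEM:", issue)
if not issues:
    print("Environment looks ready.")
```

Candidates can run this days ahead of the interview on the laptop they plan to use, so setup problems surface before the hour starts rather than during it.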

I’d love to see this kind of interview used more widely.

It’s not the end all, be all of interviews. A candidate that passes the bug squash interview is not instantly hired.

Rather, the bug squash interview is part of a balanced breakfast interview loop; it shines alongside classic interview types like one or two leetcode questions, an “experience and goals” interview, maybe a software architecture interview. In that setting: the bug squash interview tests a skill rarely covered by existing interview loops, yet is extremely common in everyday software engineering.

And honestly: it’s plain fun.