A hint to the future arrived quietly over the weekend. For a long time, I've been discussing two parallel revolutions in AI: the rise of autonomous agents and the emergence of powerful Reasoners since OpenAI's o1 was launched. These two threads have finally converged into something really impressive - AI systems that can conduct research with the depth and nuance of human experts, but at machine speed. OpenAI's Deep Research demonstrates this convergence and gives us a sense of what the future might be. But to understand why this matters, we need to start with the building blocks: Reasoners and agents.

Reasoners

For the past couple years, whenever you used a chatbot, it worked in a simple way: you typed something in, and it immediately started responding word by word (or more technically, token by token). The AI could only "think" while producing these tokens, so researchers developed tricks to improve its reasoning - like telling it to "think step by step before answering." This approach, called chain-of-thought prompting, markedly improved AI performance.

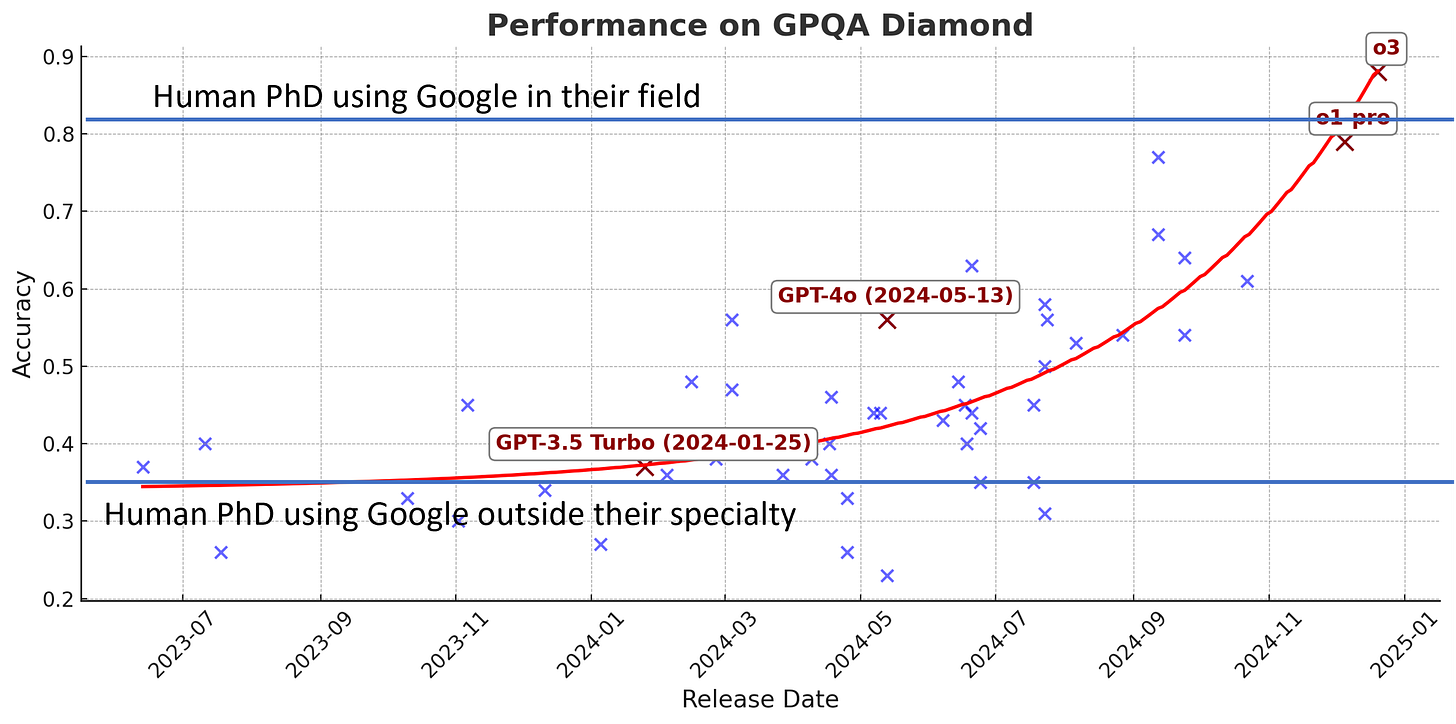

Reasoners essentially automate the process, producing “thinking tokens” before actually giving you an answer. This was a breakthrough in at least two important ways. First, because the AI companies could now get AIs to learn how to reason based on examples of really good problem-solvers, the AI can “think” more effectively. This training process can produce a higher quality chain-of-thought than we can by prompting. This means Reasoners are capable of solving much harder problems, especially in areas like math or logic where older chatbots failed.

The second way this was a breakthrough is that it turns out that the longer Reasoners “think,” the better their answers get (though the rate of improvement slows as they think longer). This is a big deal because previously the only way to make AIs perform better was to train bigger and bigger models, which is very expensive and requires a lot of data. Reasoning models show you can make AIs better by just letting them produce more and more thinking tokens, using computing power at the time of answering your question (called inference-time compute) rather than when the model was trained.

Because Reasoners are so new, their capabilities are expanding rapidly. In just months, we've seen dramatic improvements from OpenAI's o1 family to their new o3 models. Meanwhile, China's DeepSeek r1 has found innovative ways to boost performance while cutting costs, and Google has launched their first Reasoner. This is just the beginning - expect to see more of these powerful systems, and soon.

Agents

While experts debate the precise definition of an AI agent, we can think of it simply as “an AI that is given a goal and can pursue that goal autonomously.” Right now, there's an AI labs arms race to build general-purpose agents - systems that can handle any task you throw at them. I've written about some early examples like Devin and Claude with Computer Use, but OpenAI just released Operator, perhaps the most polished general-purpose agent yet.

The video below, sped up 16x, captures both the promise and pitfalls of general-purpose agents. I give Operator a task: read my latest substack post at OneUsefulThing and then go onto Google ImageFX and make an appropriate image, download it, and give it to me to post. What unfolds is enlightening. At first, Operator moves with impressive precision - finding my website, reading the post, navigating to ImageFX (pausing briefly for me to enter my login), and creating the image. Then the troubles begin, and they're twofold: not only is Operator blocked by OpenAI's security restrictions on file downloads, but it also starts to struggle with the task itself. The agent methodically tries every conceivable workaround: copying to clipboard, generating direct links, even diving into the site's source code. Each attempt fails - some due to OpenAI's browser restrictions, others due to the agent's own confusion about how to actually accomplish the task. Watching this determined but ultimately failed problem-solving loop reveals both the current limitations of these systems and raises questions about how agents will eventually behave when they encounter barriers in the real world.

Operator's issues highlight the current limits of general-purpose agents, but that doesn’t suggest that agents are useless. It appears that economically valuable narrow agents that focus on specific tasks are already possible. These specialists, powered by current LLM technology, can achieve remarkable results within their domains. Case in point: OpenAI's new Deep Research, which shows just how powerful a focused AI agent can be.

Deep Research

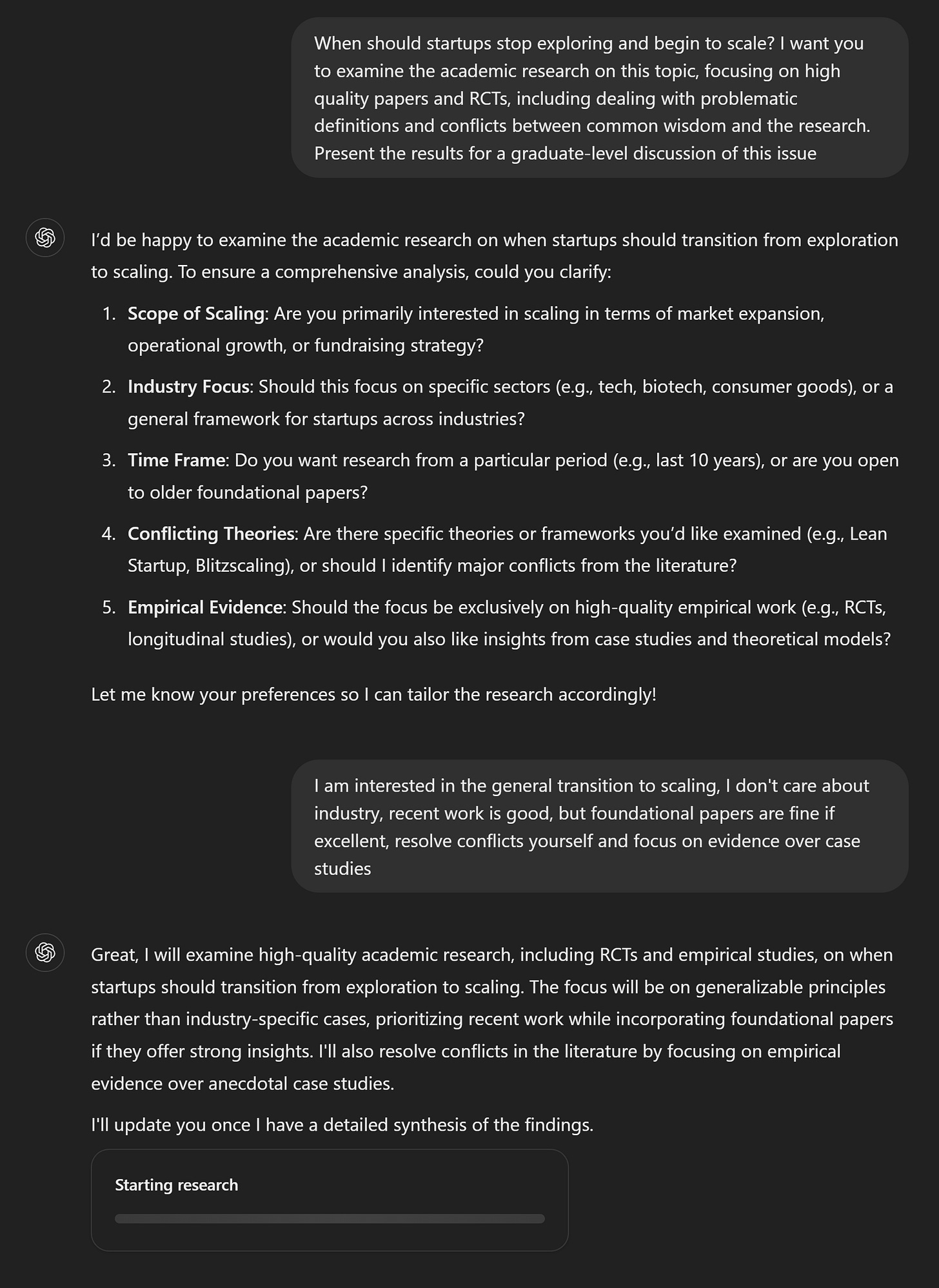

OpenAI’s Deep Research (not to be confused with Google’s Deep Research, more on that soon) is essentially a narrow research agent, built on OpenAI’s still unreleased o3 Reasoner, and with access to special tools and capabilities. It is one of the more impressive AI applications I have seen recently. To understand why, let’s give it a topic. I am specifically going to pick a highly technical and controversial issue within my field of research: When should startups stop exploring and begin to scale? I want you to examine the academic research on this topic, focusing on high quality papers and RCTs, including dealing with problematic definitions and conflicts between common wisdom and the research. Present the results for a graduate-level discussion of this issue.

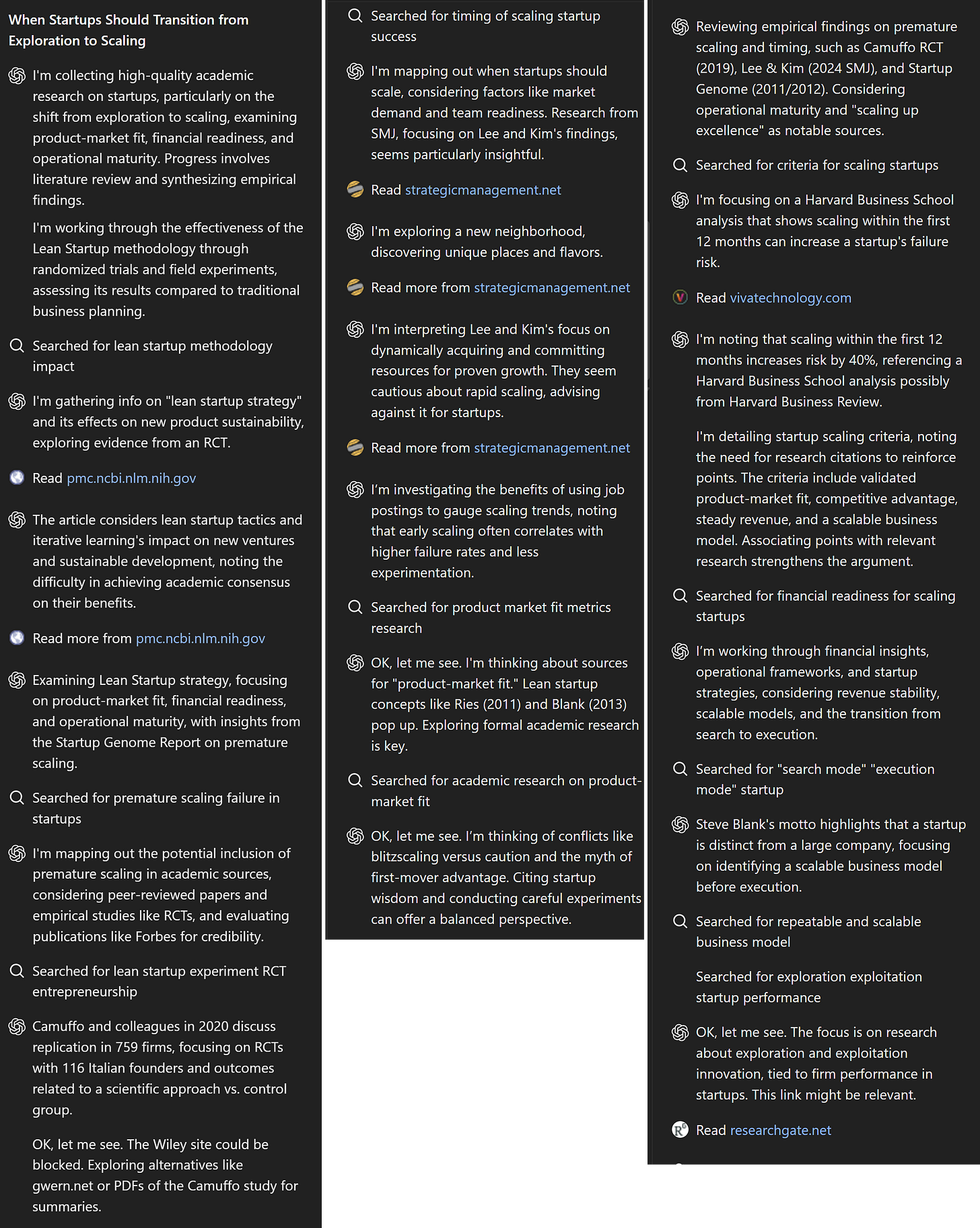

The AI asks some smart questions, and I clarify what I want. Now o3 goes off and gets to work. You can see its progress and “thinking” as it goes. It is really worth taking a second to look at a couple samples of that process below. You can see that the AI is actually working as a researcher, exploring findings, digging deeper into things that “interest” it, and solving problems (like finding alternative ways of getting access to paywalled articles). This goes on for five minutes.

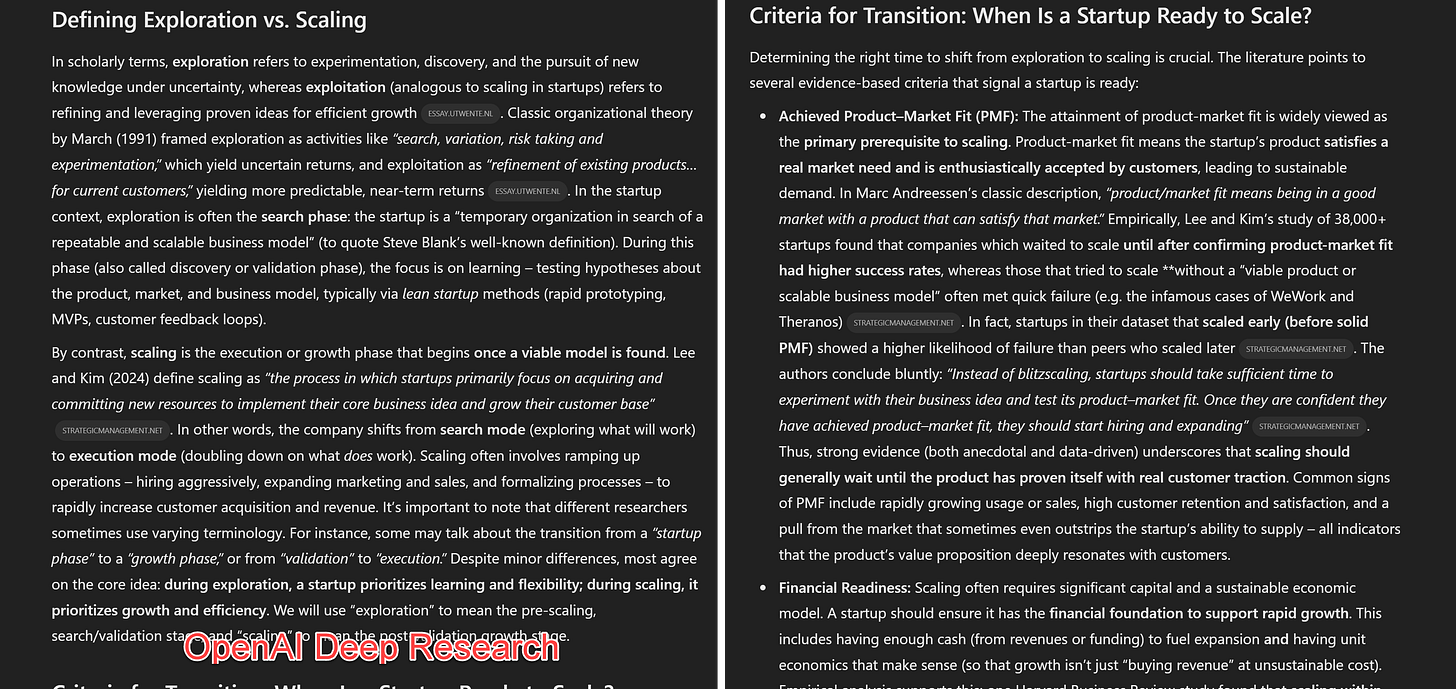

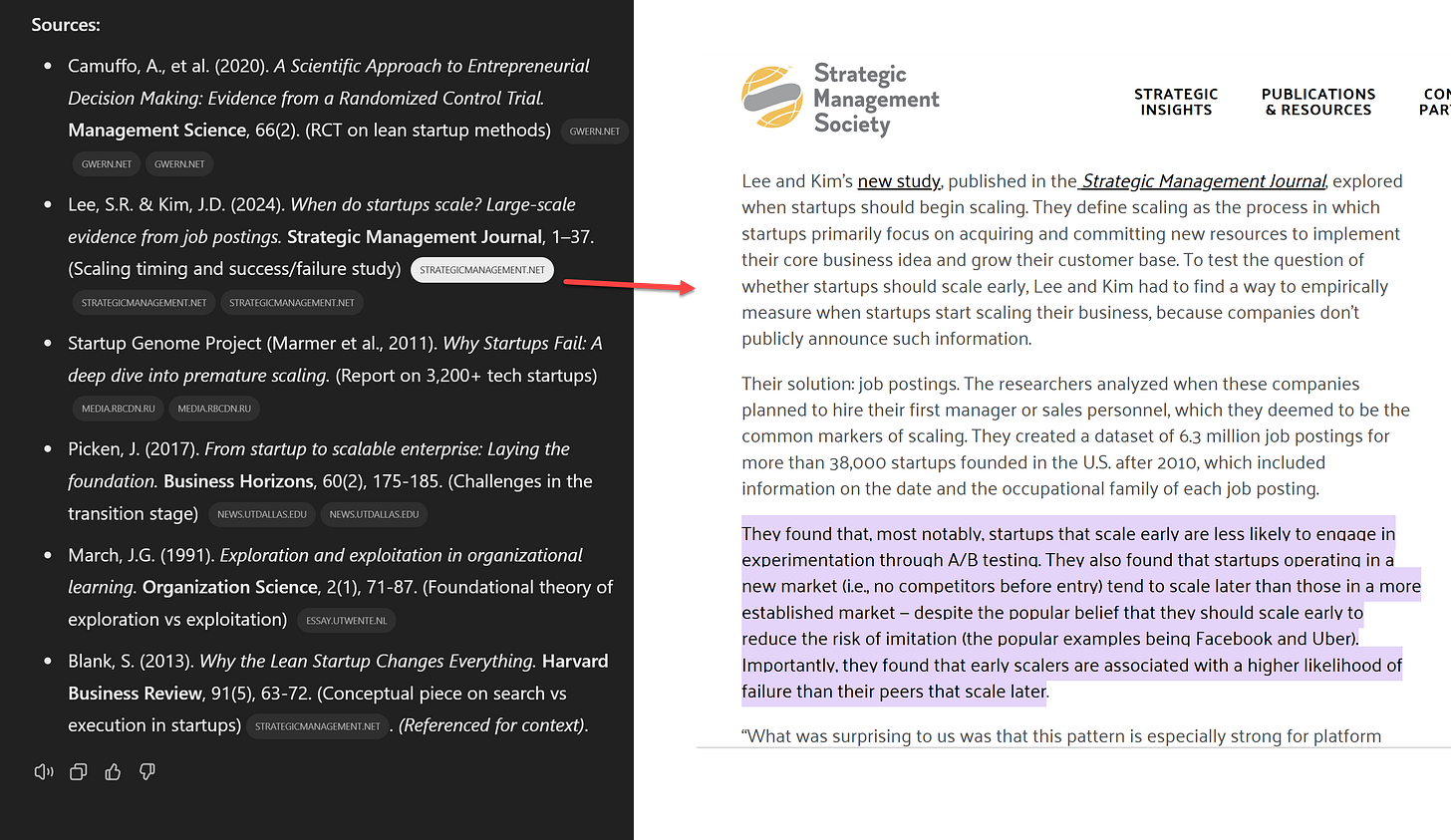

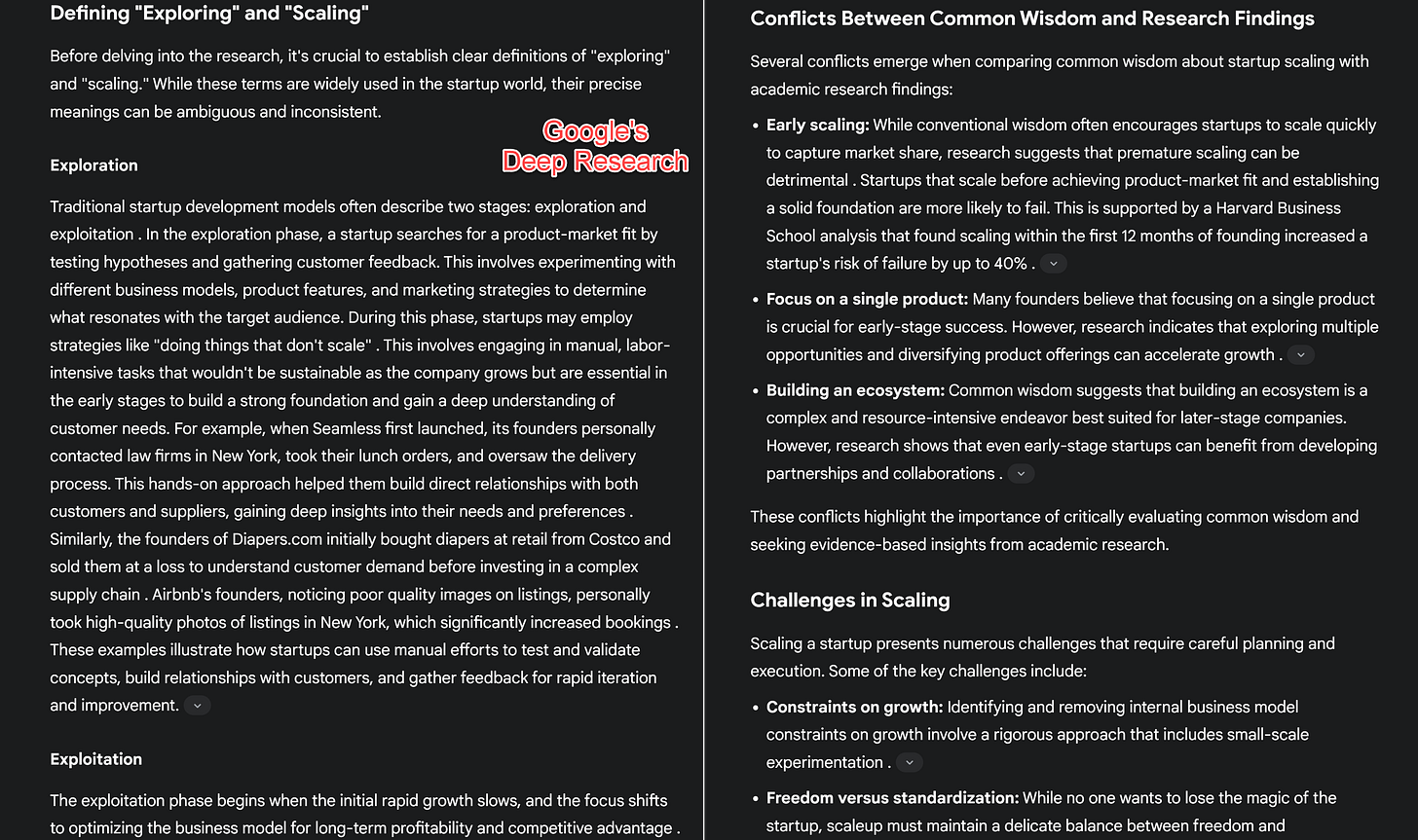

At the end, I get a 13 page, 3,778 word draft with six citations and a few additional references. It is, honestly, very good, even if I would have liked a few more sources. It wove together difficult and contradictory concepts, found some novel connections I wouldn’t expect, cited only high-quality sources, and was full of accurate quotations. I cannot guarantee everything is correct (though I did not see any errors) but I would have been satisfied to see something like it from a beginning PhD student. You can see the full results here but the couple excerpts below would suffice to show you why I am so impressed.

The quality of citations also marks a genuine advance here. These aren't the usual AI hallucinations or misquoted papers - they're legitimate, high-quality academic sources, including seminal work by my colleagues Saerom (Ronnie) Lee and Daniel Kim. When I click the links, they don't just lead to the papers, they often take me directly to the relevant highlighted quotes. While there are still constraints - the AI can only access what it can find and read in a few minutes, and paywalled articles remain out of reach - this represents a fundamental shift in how AI can engage with academic literature. For the first time, an AI isn't just summarizing research, it's actively engaging with it at a level that actually approaches human scholarly work.

It is worth contrasting it with Google’s product launched last month also called Deep Research (sigh). Google surfaces far more citations, but they are often a mix of websites of varying quality (the lack of access to paywalled information and books hurts all of these agents). It appears to gather documents all at once, as opposed to the curiosity-driven discovery of OpenAI’s researcher agent. And, because (as of now) this is powered by the non-reasoning, older Gemini 1.5 model, the overall summary is much more surface-level, though still solid and apparently error-free. It is like a very good undergraduate product. I suspect that the difference will be clear if you read a little bit below.

To put this in perspective: both outputs represent work that would typically consume hours of human effort - near PhD-level analysis from OpenAI's system, solid undergraduate work from Google's. OpenAI makes some bold claims in their announcement, complete with graphs suggesting their agent can handle 15% of high economic value research projects and 9% of very high value ones. While these numbers deserve skepticism - their methodology isn't explained - my hands-on testing suggests they're not entirely off base. Deep Research can indeed produce valuable, sophisticated analysis in minutes rather than hours. And given the rapid pace of development, I expect Google won't let this capability gap persist for long. We are likely to see fast improvement in research agents in the coming months.

The pieces come together

You can start to see how the pieces that the AI labs are building aren't just fitting together - they're playing off each other. The Reasoners provide the intellectual horsepower, while the agentic systems provide the ability to act. Right now, we're in the era of narrow agents like Deep Research, because even our best Reasoners aren't ready for general-purpose autonomy. But narrow isn’t limiting - these systems are already capable of performing work that once required teams of highly-paid experts or specialized consultancies.

These experts and consultancies aren't going away - if anything, their judgment becomes more crucial as they evolve from doing the work to orchestrating and validating the work of AI systems. But the labs believe this is just the beginning. They're betting that better models will crack the code of general-purpose agents, expanding beyond narrow tasks to become autonomous digital workers that can navigate the web, process information across all modalities, and take meaningful action in the world. Operator shows we aren’t there yet, but Deep Research suggests that we may be on our way.