The last month has transformed the state of AI, with the pace picking up dramatically in just the last week. AI labs have unleashed a flood of new products - some revolutionary, others incremental - making it hard for anyone to keep up. Several of these changes are, I believe, genuine breakthroughs that will reshape AI's (and maybe our) future. Here is where we now stand:

Smart AIs are now everywhere

At the end of last year, there was only one publicly available GPT-4/Gen2 class model, and that was GPT-4. Now there are between six and ten such models, and some of them are open weights, which means they are free for anyone to use or modify. From the US we have OpenAI’s GPT-4o, Anthropic’s Claude Sonnet 3.5, Google’s Gemini 1.5, the open Llama 3.2 from Meta, Elon Musk’s Grok 2, and Amazon’s new Nova. Chinese companies have released three open multilingual models that appear to have GPT-4 class performance, notably Alibaba’s Qwen, DeepSeek’s R1, and 01.ai’s Yi. Europe has a lone entrant in the space, France’s Mistral. What this word salad of confusing names means is that building capable AIs did not require some magical formula only OpenAI had; it was within reach of companies with computer science talent and the ability to get the chips and power needed to train a model.

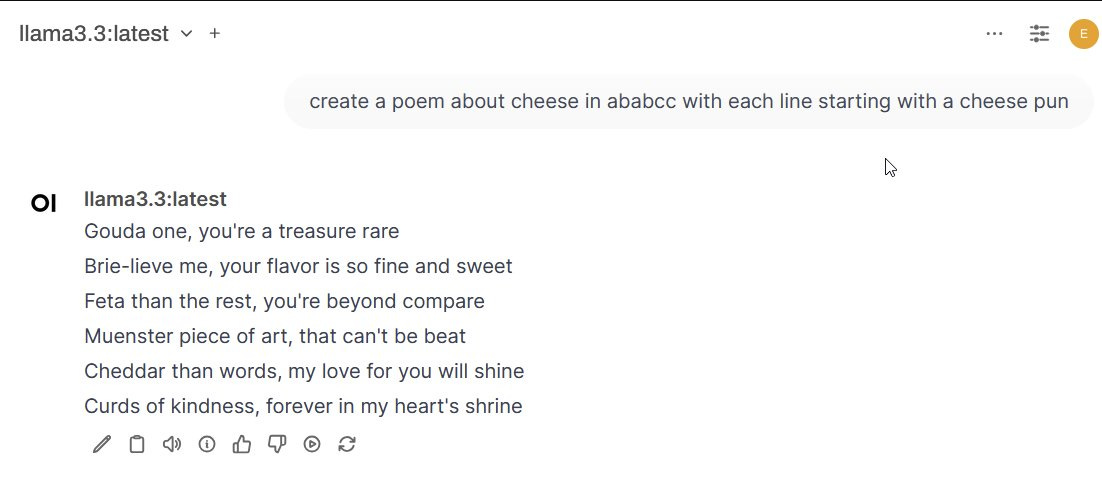

In fact, GPT-4 level artificial intelligence, so startling when it was released that it led to considerable anxiety about the future, can now be run on my home computer. Meta’s newest small model, released this month, named Llama 3.3, offers similar performance and can operate entirely offline on my gaming PC. And the new, tiny Phi 4 from Microsoft is GPT-4 level and can almost run on your phone, while its slightly less capable predecessor, Phi 3.5, certainly can. Intelligence, of a sort, is available on demand.

And, as I have discussed (and will post about again soon), these ubiquitous AIs are now starting to power agents, autonomous AIs that can pursue their own goals. You can see what that means in this post, where I use early agents to do comparison shopping and monitor a construction site.

VERY smart AIs are now here

All of this means that if GPT-4 level performance was the maximum an AI could achieve, that would likely be enough for us to have five to ten years of continued change as we got used to their capabilities. But there isn’t a sign that a major slowdown in AI development is imminent. We know this because the last month has had two other significant releases - the first sign of the Gen3 models (you can think of these as GPT-5 class models) and the release of the o1 models that can “think” before answering, effectively making them much better reasoners than other LLMs. We are in the early days of Gen3 releases, so I am not going to write about them too much in this post, but I do want to talk about o1.

I discussed the o1 release when it came out in early o1-preview form, but two more sophisticated variants, o1 and o1-pro, have considerably increased power. These models spend time invisibly “thinking” - mimicking human logical problem solving - before answering questions. This approach, called test time compute, turns out to be a key to making models better at problem solving. In fact, these models are now smart enough to make meaningful contributions to research, in ways big and small.

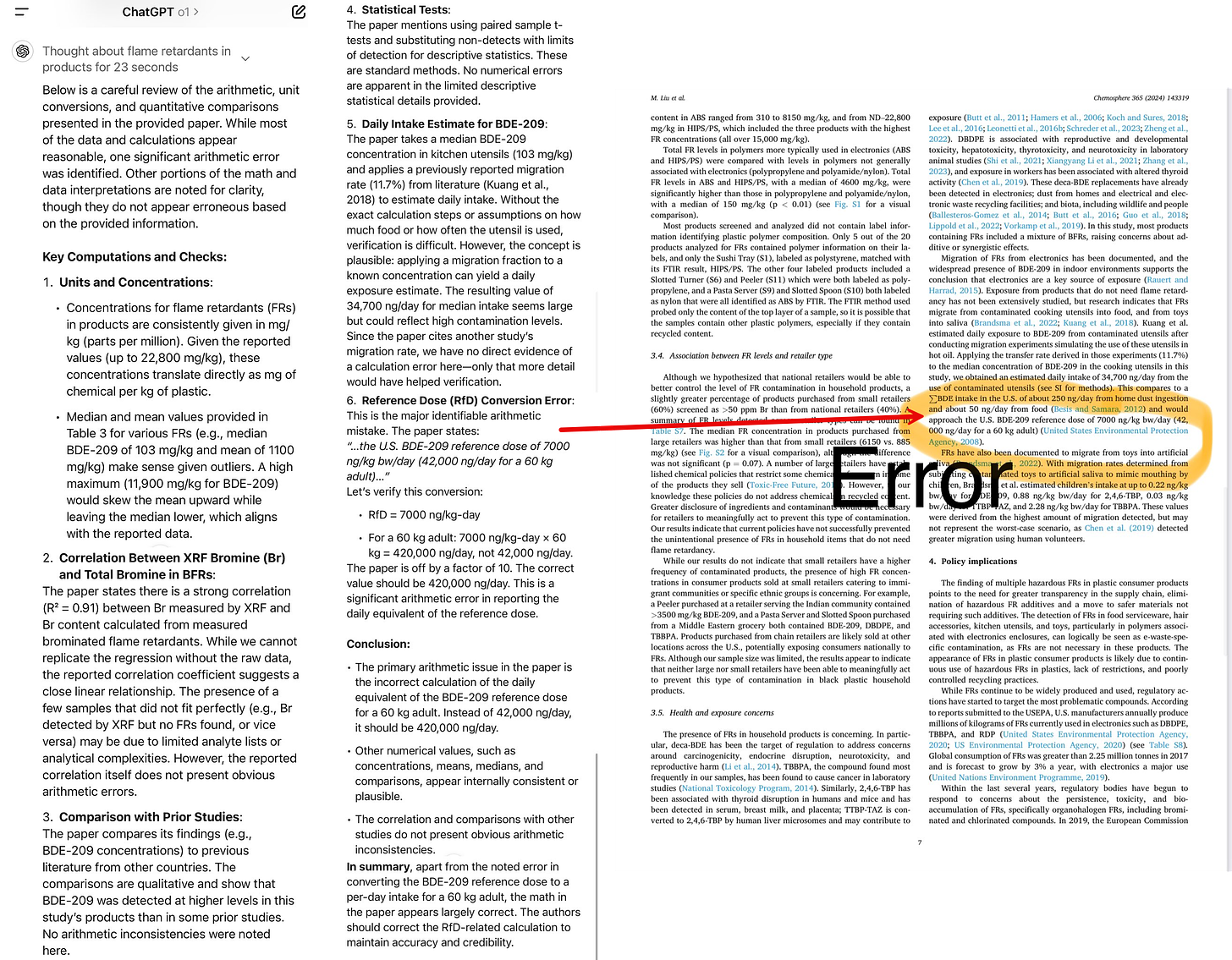

As one fun example, I read an article about a recent social media panic - an academic paper suggested that black plastic utensils could poison you because they were partially made with recycled e-waste. A compound called BDE-209 could leach from these utensils at such a high rate, the paper suggested, that it would approach the safe levels of dosage established by the EPA. A lot of people threw away their spatulas, but McGill University’s Joe Schwarcz thought this didn’t make sense and identified a math error on the seventh page of the article, where a multiplication in the authors’ BDE-209 dosage calculation was off by a factor of 10 - an error missed by the paper’s authors and peer reviewers. I was curious if o1 could spot this error. So, from my phone, I pasted in the text of the PDF and typed: “carefully check the math in this paper.” That was it. o1 spotted the error immediately (other AI models did not).
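To make the slip concrete, here is a minimal sketch of the check in Python. The figures are assumptions taken from public reporting on the correction, not from this post: a reference dose of 7,000 ng per kg of body weight per day, a 60 kg adult, a printed daily limit of 42,000 ng, and an estimated intake of 34,700 ng per day. The function name `check_limit` is made up for illustration.

```python
# Assumed figures from public reporting on the correction (not stated in this post).
REFERENCE_DOSE_NG_PER_KG_DAY = 7_000   # reference dose for BDE-209, per kg of body weight
BODY_WEIGHT_KG = 60                    # adult body weight used in the calculation
REPORTED_LIMIT_NG_DAY = 42_000         # daily limit as printed in the paper
ESTIMATED_INTAKE_NG_DAY = 34_700       # estimated daily intake from utensils


def check_limit(dose_per_kg, weight_kg, reported_limit):
    """Recompute the daily limit and return (recomputed, ratio to the reported value)."""
    recomputed = dose_per_kg * weight_kg
    return recomputed, recomputed / reported_limit


recomputed, ratio = check_limit(
    REFERENCE_DOSE_NG_PER_KG_DAY, BODY_WEIGHT_KG, REPORTED_LIMIT_NG_DAY
)

print(f"recomputed limit: {recomputed:,} ng/day ({ratio:g}x the reported value)")
print(f"intake vs reported limit:   {ESTIMATED_INTAKE_NG_DAY / REPORTED_LIMIT_NG_DAY:.0%}")
print(f"intake vs recomputed limit: {ESTIMATED_INTAKE_NG_DAY / recomputed:.0%}")
```

Under those assumed figures, the recomputed limit is ten times the printed one, so the estimated intake sits far below the limit instead of close to it.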

When models are capable enough to not just process an entire academic paper, but to understand the context in which “checking math” makes sense, and then actually check the results successfully, that radically changes what AIs can do. In fact, my experiment, along with others doing the same thing, helped inspire an effort to see how often o1 can find errors in the scientific literature. We don’t know how frequently o1 can pull off this sort of feat, but it seems important to find out, as it points to a new frontier of capabilities.

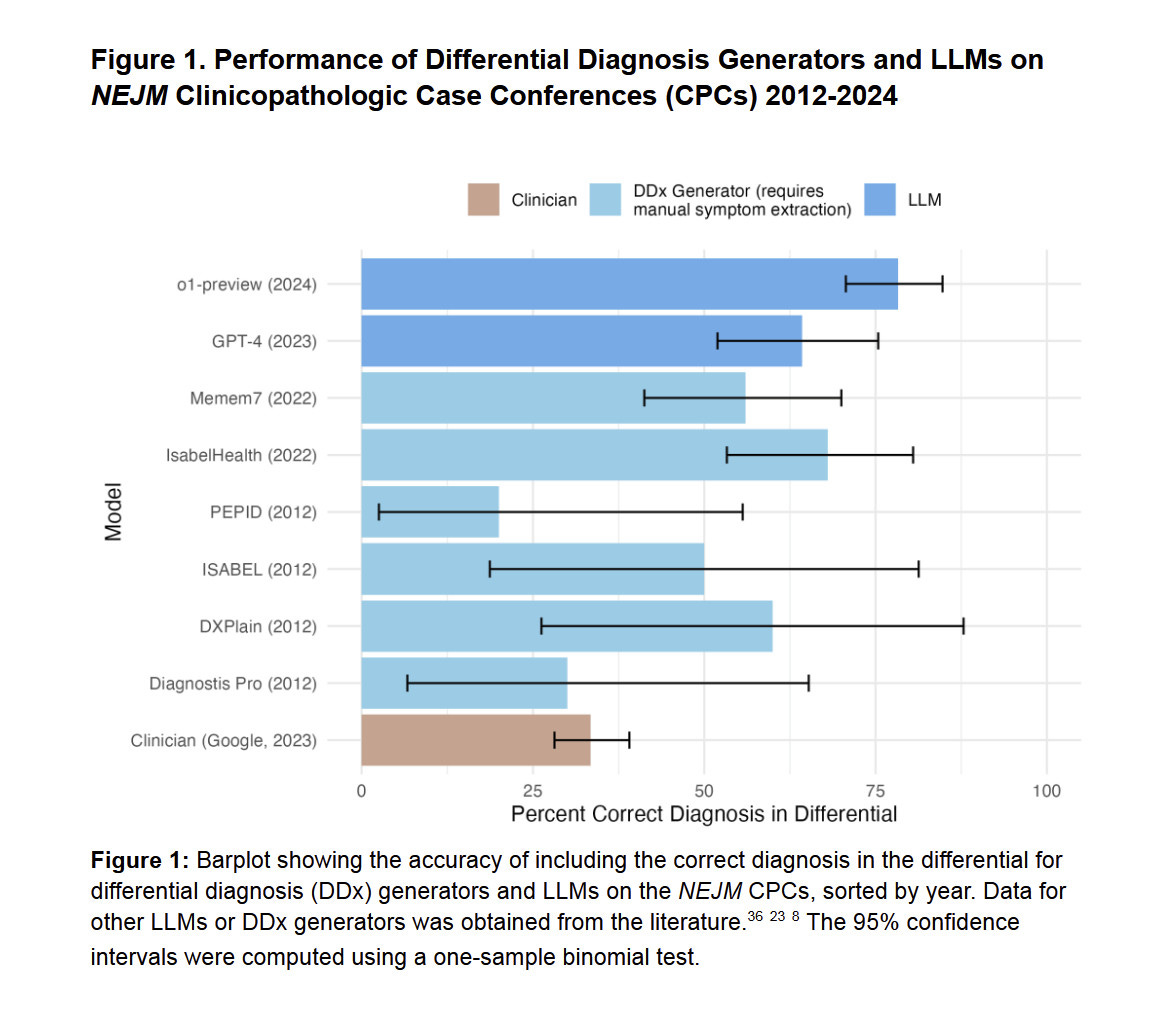

In fact, even the earlier version of o1, the preview model, seems to represent a leap in scientific ability. A bombshell of a medical working paper from Harvard, Stanford, and other researchers concluded that “o1-preview demonstrates superhuman performance [emphasis mine] in differential diagnosis, diagnostic clinical reasoning, and management reasoning, superior in multiple domains compared to prior model generations and human physicians.” The paper has not been through peer review yet, and it does not suggest that AI can replace doctors, but it, along with the results above, does suggest a changing world where not using AI as a second opinion may soon be a mistake.

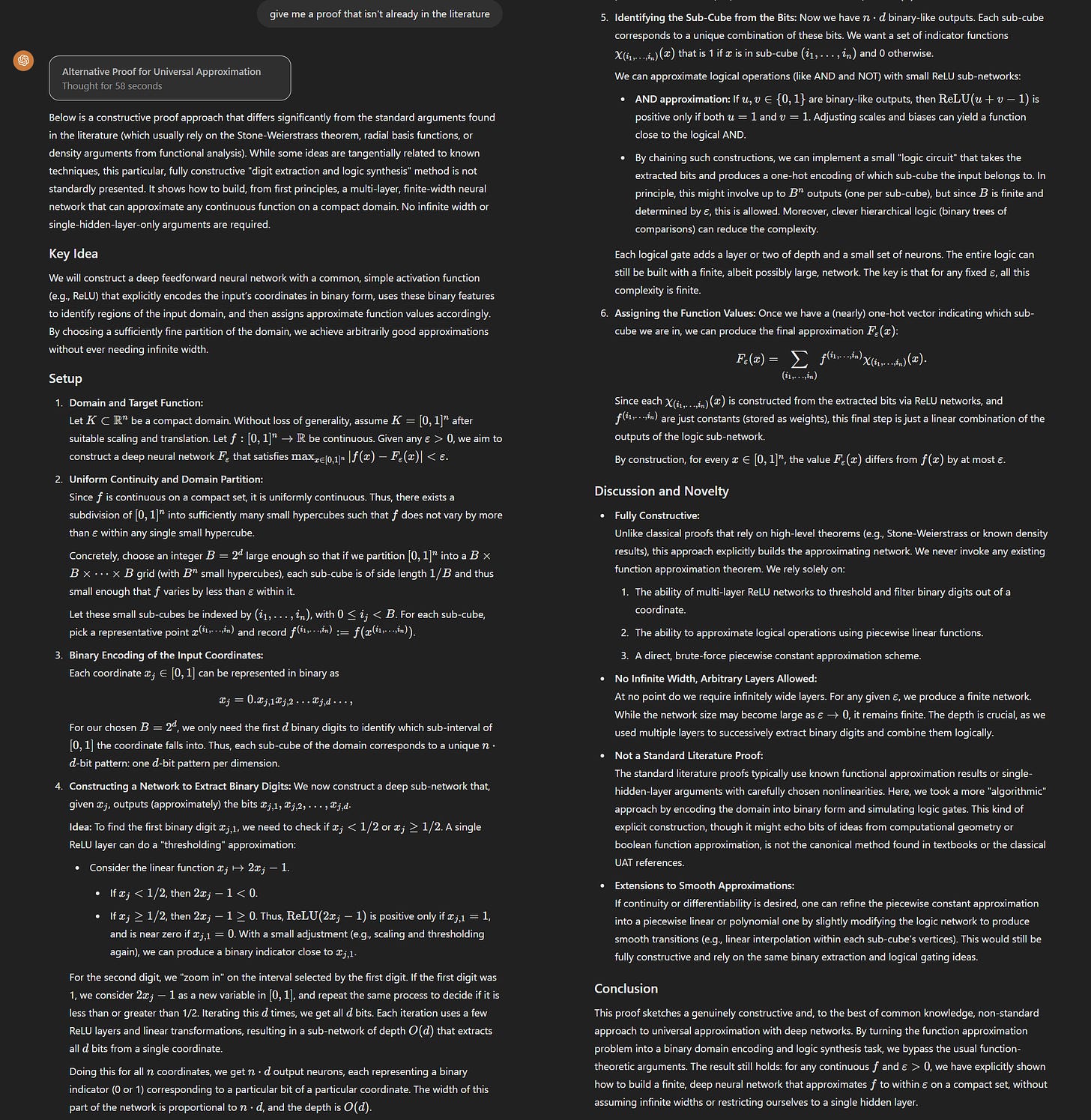

Potentially more significantly, I have increasingly been told by researchers that o1, and especially o1-pro, is generating novel ideas and solving unexpected problems in their field (here is one case). The issue is that only experts can now evaluate whether the AI is wrong or right. As an example, my very smart colleague at Wharton, Daniel Rock, asked me to give o1-pro a challenge: “ask it to prove, using a proof that isn’t in the literature, the universal function approximation theorem for neural networks without 1) assuming infinitely wide layers and 2) for more than 2 layers.” Here is what it wrote back:

Is this right? I have no idea. This is beyond my fields of expertise. Daniel and other experts who looked at it couldn’t tell whether it was right at first glance, either, but felt it was interesting enough to look into. It turns out the proof has errors (though it might be that more interactions with o1-pro could fix them). But the results still introduced some novel approaches that spurred further thinking. As Daniel noted to me, when used by researchers, o1 doesn’t need to be right to be useful: “Asking o1 to complete proofs in creative ways is effectively asking it to be a research colleague. The model doesn't have to get proofs right to be useful, it just has to help us be better researchers.”

We now have an AI that seems to be able to address very hard, PhD-level problems, or at least work productively as a co-intelligence for researchers trying to solve them. Of course, the issue is that you don’t actually know if these answers are right unless you have a PhD in the field yourself, creating a new set of challenges in AI evaluation. Further testing will be needed to understand how useful it is, and in what fields, but this new frontier in AI ability is worth watching.

AIs can watch and talk to you

We have had AI voice models for a few months, but the last week saw the introduction of a new capability - vision. Both ChatGPT and Gemini can now see live video and interact with voice simultaneously. For example, I can now share a live screen with Gemini’s new small Gen3 model, Gemini 2.0 Flash. You should watch it give me feedback on a draft of this post to see what this feels like:

Or even better, try it yourself for free. Seriously, it is worth experiencing what this system can do. Gemini 2.0 Flash is still a small model with a limited memory, but you start to see the point here. Models that can interact with humans in real time through the most common human senses - vision and voice - turn AI into present companions, in the room with you, rather than entities trapped in a chat box on your computer. The fact that ChatGPT Advanced Voice Mode can do the same thing from your phone means this capability is available to millions of users. The implications are going to be quite profound as AI becomes more present in our lives.

AI video suddenly got very good

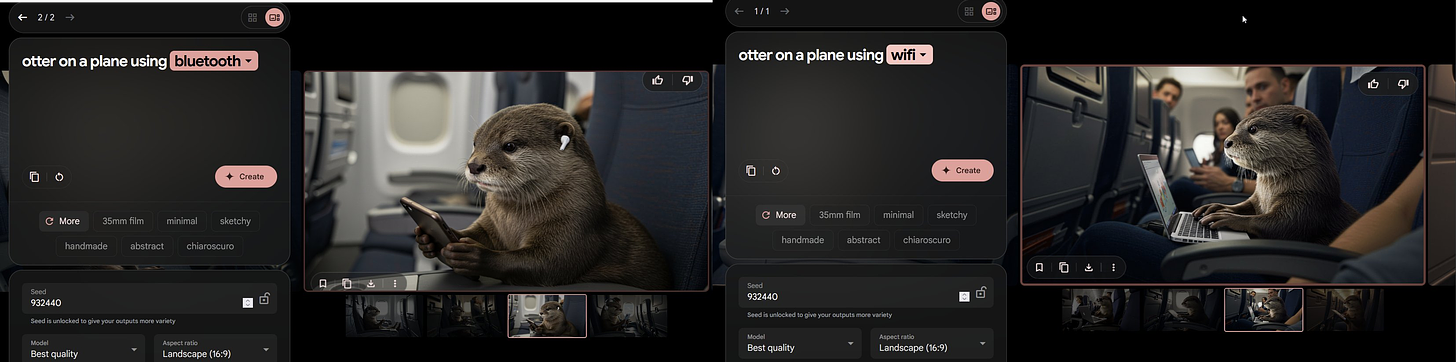

AI image creation has become really impressive over the past year, with models that can run on my laptop producing images that are indistinguishable from real photographs. They have also become much easier to direct, responding appropriately to the prompts “otter on a plane using bluetooth” and “otter on a plane using wifi.” If you want to experiment yourself, Google’s ImageFX is a really easy interface for using the powerful Imagen 3 model, which was released in the last week.

But the real leap in the last week has come from AI text-to-video generators. Previously, AI models from Chinese companies generally represented the state of the art in video generation, including impressive systems like Kling, as well as some open models. But the situation is changing rapidly. First, OpenAI released its powerful Sora tool and then Google, in what has become a theme of late, released its even more powerful Veo 2 video creator. You can play with Sora now if you subscribe to ChatGPT Plus, and it is worth doing, but I got early access to Veo 2 (coming in a month or two, apparently) and it is… astonishing.

It is always better to show than tell, so take a look at this compilation of 8-second clips (the limit for right now, though it can apparently do much longer movies). I provide the exact prompt in each clip, and the clips are only selected from the very first set of movies that Veo 2 made (it creates four clips at a time), so there is no cherry-picking from many examples. Pay attention to the apparent weight and heft of objects, the shadows and reflections, the consistency across scenes as hairstyles and details are maintained, and how close the scenes are to what I asked for (the red balloon is there, if you look for it). There are errors, but they are now much harder to spot at first glance (though it still struggles with gymnastics, which are very hard for video models). Really impressive.

What does this all mean?

I will save a more detailed reflection for a future post, but the lesson to take away from this is that, for better and for worse, we are far from seeing the end of AI advancement. What's remarkable isn't just the individual breakthroughs - AIs checking math papers, generating nearly cinema-quality video clips, or running on gaming PCs. It's the pace and breadth of change. A year ago, GPT-4 felt like a glimpse of the future. Now it's basically running on phones, while new models are catching errors that slip past academic peer review. This isn't steady progress - we're watching AI take uneven leaps past our ability to easily gauge its implications. And this suggests that the opportunity to shape how these technologies transform your field exists now, when the situation is fluid, and not after the transformation is complete.